Determining rlimit (ulimit) values for a running process

Question

How can I find out what limits are set for a currently running process?

Answer

The easiest way is to download the pdump.sh script and run it against the process. The pdump tool can be downloaded from here:

ftp://ftp.software.ibm.com/aix/tools/debug/

No installation is needed; just make the file executable:

$ chmod +x pdump.sh

Then run it against the process ID (PID) of the process you want to examine. The pdump.sh script creates an output file containing information about that process.

# ./pdump.sh 3408030

The output file name contains the process name, the PID, and the current date and time. For example:

pdump.tier1slp.3408030.13May2015-11.18.13.out

This is an ASCII text file and can be inspected with "more" or "view".

Determining the limit values

Limits for a process are kept in the user area ("uarea") of the process memory. In the pdump output, this section starts with the title "Resource limits:".

Resource limits:

fsblimit....00000000001FFFFF

rlimit[CPU]........... cur 7FFFFFFF max 7FFFFFFF

saved_rlimit[CPU]..... cur 7FFFFFFFFFFFFFFF max 7FFFFFFFFFFFFFFF

rlimit_flag[CPU]...... cur INF max INF

rlimit[FSIZE]......... cur 3FFFFE00 max 3FFFFE00

saved_rlimit[FSIZE]... cur 000000003FFFFE00 max 000000003FFFFE00

rlimit_flag[FSIZE].... cur SML max SML

rlimit[DATA].......... cur 08000000 max 7FFFFFFF

saved_rlimit[DATA].... cur 0000000008000000 max 7FFFFFFFFFFFFFFF

rlimit_flag[DATA]..... cur SML max INF

rlimit[STACK]......... cur 02000000 max 7FFFFFFF

saved_rlimit[STACK]... cur 0000000002000000 max 0000000100000000

rlimit_flag[STACK].... cur SML max MAX

rlimit[CORE].......... cur 3FFFFE00 max 7FFFFFFF

saved_rlimit[CORE].... cur 000000003FFFFE00 max 7FFFFFFFFFFFFFFF

rlimit_flag[CORE]..... cur SML max INF

rlimit[RSS]........... cur 02000000 max 7FFFFFFF

saved_rlimit[RSS]..... cur 0000000002000000 max 7FFFFFFFFFFFFFFF

rlimit_flag[RSS]...... cur SML max INF

rlimit[AS]............ cur 7FFFFFFF max 7FFFFFFF

saved_rlimit[AS]...... cur 0000000000000000 max 0000000000000000

rlimit_flag[AS]....... cur INF max INF

rlimit[NOFILE]........ cur 000007D0 max 7FFFFFFF

saved_rlimit[NOFILE].. cur 00000000000007D0 max 7FFFFFFFFFFFFFFF

rlimit_flag[NOFILE]... cur SML max INF

rlimit[THREADS]....... cur 7FFFFFFF max 7FFFFFFF

saved_rlimit[THREADS]. cur 0000000000000000 max 0000000000000000

rlimit_flag[THREADS].. cur INF max INF

rlimit[NPROC]......... cur 7FFFFFFF max 7FFFFFFF

saved_rlimit[NPROC]... cur 0000000000000000 max 0000000000000000

rlimit_flag[NPROC].... cur INF max INF

Each ulimit value appears here as three entries: rlimit, saved_rlimit, and rlimit_flag. Because a value can be either 32-bit or 64-bit, the comment in the include file /usr/include/sys/user.h explains how to read them:

/*

* To maximize compatibility with old kernel code, a 32-bit

* representation of each resource limit is maintained in U_rlimit.

* Should the limit require a 64-bit representation, the U_rlimit

* value is set to RLIM_INFINITY, with actual 64-bit limit being

* stored in U_saved_rlimit. These flags indicate what

* the real situation is:

*

* RLFLAG_SML => limit correctly represented in 32-bit U_rlimit

* RLFLAG_INF => limit is infinite

* RLFLAG_MAX => limit is in 64_bit U_saved_rlimit.rlim_max

* RLFLAG_CUR => limit is in 64_bit U_saved_rlimit.rlim_cur

Using this and the pdump output, we can read the value of NOFILE, for example:

rlimit[NOFILE]........ cur 000007D0 max 7FFFFFFF

saved_rlimit[NOFILE].. cur 00000000000007D0 max 7FFFFFFFFFFFFFFF

rlimit_flag[NOFILE]... cur SML max INF

The cur flag for NOFILE is SML, so the soft limit is correctly represented by the 32-bit value in the cur column of rlimit[NOFILE]. The max flag is INF, so the hard limit is unlimited.

0x7D0 = 2000 decimal, so the limit for that user, picked up by the process when it started, is 2000.
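The user.h rules can be applied mechanically to the whole "Resource limits" section. The sketch below is a minimal Python illustration, assuming the pdump line layout shown above; the helper names (parse_limits, effective) are hypothetical, and treating a MAX or CUR flag as "read the matching 64-bit saved_rlimit field" is our reading of the header comment, not documented pdump behavior.

```python
import re

# Matches pdump lines such as "rlimit_flag[NOFILE]... cur SML max INF"
LINE_RE = re.compile(
    r"^(rlimit|saved_rlimit|rlimit_flag)\[(\w+)\]\.*\s*cur\s+(\S+)\s+max\s+(\S+)"
)

CUR, MAX = 0, 1  # column indexes in each (cur, max) pair


def parse_limits(text):
    """Group pdump "Resource limits" lines by resource name."""
    table = {}
    for line in text.splitlines():
        m = LINE_RE.match(line.strip())
        if m:
            kind, resource, cur, mx = m.groups()
            table.setdefault(resource, {})[kind] = (cur, mx)
    return table


def effective(entry, column):
    """Return one limit as an int, or None when it is unlimited."""
    flag = entry["rlimit_flag"][column]
    if flag == "INF":
        return None  # RLFLAG_INF: limit is infinite
    if flag == "SML":
        return int(entry["rlimit"][column], 16)  # fits in the 32-bit rlimit
    if flag == "MAX":
        return int(entry["saved_rlimit"][MAX], 16)  # 64-bit saved rlim_max
    if flag == "CUR":
        return int(entry["saved_rlimit"][CUR], 16)  # 64-bit saved rlim_cur
    raise ValueError(f"unexpected flag {flag!r}")


SAMPLE = """\
rlimit[NOFILE]........ cur 000007D0 max 7FFFFFFF
saved_rlimit[NOFILE].. cur 00000000000007D0 max 7FFFFFFFFFFFFFFF
rlimit_flag[NOFILE]... cur SML max INF
rlimit[STACK]......... cur 02000000 max 7FFFFFFF
saved_rlimit[STACK]... cur 0000000002000000 max 0000000100000000
rlimit_flag[STACK].... cur SML max MAX
"""

limits = parse_limits(SAMPLE)
print(effective(limits["NOFILE"], CUR))  # 2000
print(effective(limits["NOFILE"], MAX))  # None (unlimited)
print(effective(limits["STACK"], MAX))   # 4294967296 (from saved_rlimit)
```

The STACK line shows why the flag matters: the 32-bit max column says 7FFFFFFF, but the MAX flag points to the 64-bit value 0x100000000 instead.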

Source: IBM Technote

Common EFS Errors and Solutions

Question

This document is a collection of errors encountered when using EFS and solutions to those issues.

Answer

1) Problem: Can't enable EFS on the system

# efsenable -a

/usr/lib/drivers/crypto/clickext: A file or directory in the path name does not exist.

Unable to load CLiC kernel extension. Please check your installation.

Solution:

Install the CLiC filesets from the AIX Expansion Pack CD:

$ installp -l -d clic.rte

Fileset Name Level I/U Q Content

====================================================================

clic.rte.includes 4.3.0.0 I N usr

# CryptoLite for C Library Include File

clic.rte.kernext 4.3.0.0 I N usr,root

# CryptoLite for C Kernel

clic.rte.lib 4.3.0.0 I N usr

# CryptoLite for C Library

2) Problem: Can't enable EFS on the system

# efsenable -a

Unable to load CLiC kernel extension. Please check your installation.

(Please make sure latest version of clic.rte is installed.)

Solution:

Double-check that you have installed the correct version of the CLiC filesets for your AIX Technology Level:

For AIX 6100-01, use clic.rte.4.3.0.0.I on the Expansion Pack CD.

For AIX 6100-02, use clic.rte.4.5.0.0.I on the Expansion Pack CD.

AIX 6100-03 has been updated to include clic.rte on the base media set, to prevent boot issues on systems with EFS enabled. Use clic.rte.4.6.0.1.I.

For AIX 6100-04, use clic.rte.4.7.0.0.I, which is also included in the base OS media.
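To pick the right image from this list, the Technology Level can be read from the output of oslevel -s (for example 6100-04-01-0944). A minimal Python sketch, assuming that output format; the table only covers the four AIX 6.1 levels listed here, and the function name is hypothetical.

```python
import re

# CLiC fileset images per AIX 6.1 Technology Level, as listed in this note
CLIC_BY_TL = {
    1: "clic.rte.4.3.0.0.I",  # Expansion Pack CD
    2: "clic.rte.4.5.0.0.I",  # Expansion Pack CD
    3: "clic.rte.4.6.0.1.I",  # base media
    4: "clic.rte.4.7.0.0.I",  # base media
}


def clic_for_oslevel(oslevel):
    """Map an 'oslevel -s' string such as '6100-04-01-0944' to a CLiC image.

    Returns None for levels this table does not cover.
    """
    m = re.match(r"6100-(\d{2})", oslevel.strip())
    if not m:
        return None  # not AIX 6.1
    return CLIC_BY_TL.get(int(m.group(1)))


print(clic_for_oslevel("6100-04-01-0944"))  # clic.rte.4.7.0.0.I
```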

3) Problem: Can't view a user's key:

$ efskeymgr -v

Problem initializing EFS framework.

Please check EFS is installed and enabled (see efsenable) on you system.

Error was: (EFS was not configured)

Solution:

Enable EFS on the system:

# efsenable -a

and enter root's password when prompted for root's initial keystore.

4) Problem: Can't enable encryption inheritance on a directory.

# efsmgr -E testdir

or

Can't enable encryption on a specific file

# efsmgr -e myfile

Problem initializing EFS framework.

Please check EFS is installed and enabled on you system.

Error was: (EFS was not configured)

Solution:

Make sure CLiC filesets are installed

Enable EFS on the system

Enable EFS and RBAC on the filesystem:

# chfs -a efs=yes /myfilesystem

5) Problem: EFS is enabled on a filesystem but mounting it fails:

# mount /efstest

The CLiC library (libclic.a) is not available. Install clic.rte and run 'efsenable -a'.

Solution:

Install CLiC filesets

Enable EFS on the system

Remount the filesystem

6) Problem: No encryption algorithms show up!

# efsenable -q

List of supported algorithms for keystores:

1

2

3

List of supported ciphers for files:

1

2

3

4

5

6

Solution:

Install CLiC filesets

# efsenable -q

List of supported algorithms for keystores:

1 RSA_1024

2 RSA_2048

3 RSA_4096

List of supported ciphers for files:

1 AES_128_CBC

2 AES_192_CBC

3 AES_256_CBC

4 AES_128_ECB

5 AES_192_ECB

6 AES_256_ECB
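The symptom is easy to detect in a script: each algorithm number appears without a name. A small Python sketch, assuming the efsenable -q layout shown above (a number optionally followed by a name); the function is hypothetical and only flags unnamed entries, it does not check the CLiC installation itself.

```python
def unnamed_entries(output):
    """Return the numbers of efsenable -q entries that have no algorithm name."""
    missing = []
    for line in output.splitlines():
        fields = line.split()
        # A healthy entry looks like "1 RSA_1024"; a bare "1" means no name
        if len(fields) == 1 and fields[0].isdigit():
            missing.append(int(fields[0]))
    return missing


broken = """List of supported algorithms for keystores:
1
2
3
List of supported ciphers for files:
1
2
"""
print(unnamed_entries(broken))  # [1, 2, 3, 1, 2]
```

An empty result on the healthy output is what you would expect once the CLiC filesets are installed.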

Source: IBM Technote

How to check for memory over-commitment in AME

Question

In LPARs that use the POWER7 (and later) Active Memory Expansion (AME) feature, assessing memory resources is more complex than on systems with dedicated memory. How can the memory in such a system be evaluated?

Answer

Introduction

Active Memory Expansion (AME) compresses memory pages to increase the system's effective memory capacity. At high usage, unused computational memory is moved to the compressed pool instead of being paged out to paging space. AME is typically used in environments that have excess CPU resources and are somewhat constrained on physical memory. Active Memory Expansion is a feature introduced with POWER7/POWER7+ systems and requires a minimum level of AIX 6.1 TL4 SP2.

AME Scenarios

After planning and configuring the system with the amepat tool, there are some scenarios that might require a change of AME configuration:

- Virtual memory exceeds Target Memory Expansion Size: the system is over-committed and will start paging out to disk. From a configuration standpoint, rerun the amepat tool to either increase the Expansion Factor or increase the size of physical memory.

- Virtual memory exceeds assigned physical memory and is less than Target Memory Expansion Size (with no deficit): this is the ideal scenario when using AME, as the compressed pool is able to satisfy the memory demands of the LPAR.

- Virtual memory exceeds assigned physical memory and is less than Target Memory Expansion Size (with deficit): the system is unable to compress memory pages enough to meet the Target Memory Expansion Size, so there is a deficit and pages that exceed the allocated memory are moved to paging space. Not all memory pages are subject to compression (pinned pages or client pages, for example), which is why a deficit can occur. Rerun the amepat tool to either decrease the Expansion Factor or increase the size of physical memory.

- Virtual memory is below assigned physical memory: there is no over-commitment problem with this setup, but it is not benefiting from AME. Rerun the amepat tool to decrease the allocated physical memory and evaluate the current Expansion Factor.
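The four scenarios reduce to a few comparisons. A minimal Python sketch, assuming all sizes have already been converted to MB (for example from amepat output); the function name and return labels are hypothetical, and the boundaries are a literal reading of the list above rather than an IBM rule.

```python
def ame_scenario(virtual_mb, physical_mb, target_expanded_mb, deficit_mb):
    """Classify an AME LPAR into one of the four scenarios (all sizes in MB)."""
    if virtual_mb > target_expanded_mb:
        # Over-committed: expect paging; raise expansion factor or add memory
        return "over-committed"
    if virtual_mb > physical_mb:
        if deficit_mb > 0:
            # Compression cannot reach the target: lower factor or add memory
            return "deficit"
        # Compressed pool satisfies demand
        return "ideal"
    # Workload fits in physical memory: AME adds no benefit
    return "no-benefit"


print(ame_scenario(12288, 8192, 10240, 0))   # over-committed
print(ame_scenario(9216, 8192, 10240, 0))    # ideal
print(ame_scenario(9216, 8192, 10240, 512))  # deficit
print(ame_scenario(4096, 8192, 10240, 0))    # no-benefit
```

The sample figures are illustrative only, not taken from a real LPAR.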

Tools to use with a live example

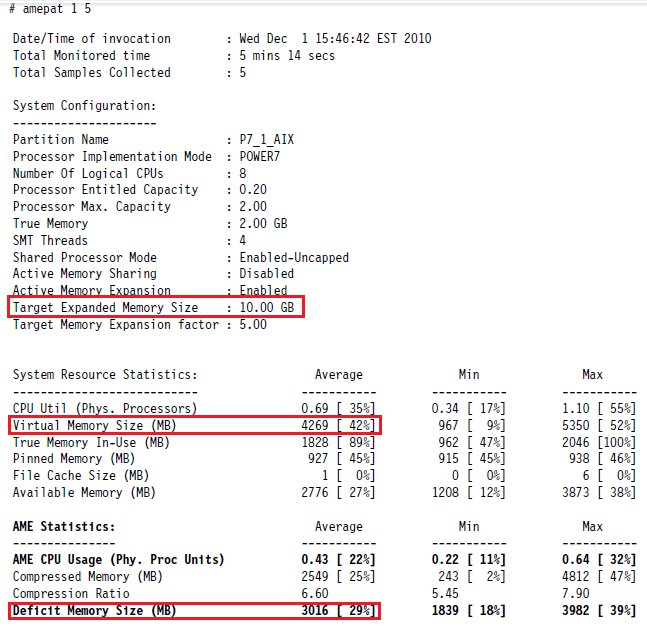

The following tools on AIX can be used to determine the current status of an AME-enabled LPAR (with a live example from the IBM Redbook IBM PowerVM Virtualization Managing and Monitoring):

# amepat

- Comparing the Virtual Memory Size (MB) to the Target Expanded Memory Size shows that the system is not logically over-committed.

- Because of the Deficit Memory Size (MB), the system will start using paging space, since it cannot compress any more memory.

# vmstat -c

- Comparing the avm value (in 4 KB pages) to the tmem value (MB) tells us whether the system is logically over-committed.

- The dxm column shows the deficit in 4 KB pages.

# svmon -O summary=AME

- Comparing the virtual column to the size column shows no logical memory over-commitment.

- The dxm column shows the deficit in 4 KB pages.
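Because avm and dxm are reported in 4 KB pages while tmem is in MB, the comparison needs a unit conversion first. A minimal Python sketch of that arithmetic; the function names are hypothetical, and the column values have to be read from the vmstat -c output yourself.

```python
PAGE_KB = 4  # avm and dxm are reported in 4 KB pages


def pages_to_mb(pages):
    """Convert a count of 4 KB pages to MB."""
    return pages * PAGE_KB / 1024


def logically_overcommitted(avm_pages, tmem_mb):
    """True when active virtual memory exceeds the target expanded memory."""
    return pages_to_mb(avm_pages) > tmem_mb


# Illustrative numbers, not taken from the Redbook example
print(pages_to_mb(262144))                    # 1024.0
print(logically_overcommitted(786432, 2048))  # True (3072 MB > 2048 MB)
```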

For more information regarding AME, please refer to the IBM Redbook IBM PowerVM Virtualization Managing and Monitoring (sg247590):

http://www.redbooks.ibm.com/abstracts/sg247590.html

Source: IBM Technote

IBM AIX – From Strength to Strength – 2014

An interesting document that summarizes the features and support by version of AIX, Virtual I/O Server, and other IBM POWER products.

Permanent link:

http://public.dhe.ibm.com/common/ssi/ecm/en/poo03022usen/POO03022USEN.PDF

Thank you, Jay.

Repair AIX IPL hang at LED value 518

To repair an AIX server hung at LED 518, you can follow the IBM Technote:

http://www-01.ibm.com/support/docview.wss?uid=isg3T1000131

In my case that was not enough, because the hd2 LVCB was corrupted:

- hd2 LVCB corrupted

- /etc/filesystems corrupted for hd2

- ODM corrupted in CuAt

Boot into Maintenance mode from an AIX DVD, NIM, or a mksysb (tape, DVD, ISO).

Choose option 3, then Access a Root Volume Group, and identify the disk that contains the system LVs (hd4, hd2, ...).

Installation and Maintenance

Type the number of your choice and press Enter. Choice is indicated by >>>.

1 Start Install Now with Default Settings

2 Change/Show Installation Settings and Install

=> 3 Start Maintenance Mode for System Recovery

4 Make Additional Disks Available

5 Select Storage Adapters

Maintenance

Type the number of your choice and press Enter.

=> 1 Access a Root Volume Group

Type the number of your choice and press Enter.

0 Continue

Access a Root Volume Group

Type the number for a volume group to display the logical volume information

and press Enter.

1) Volume Group 00c8502e00004c0000000145e1f142ed contains these disks:

hdisk0 10240 vscsi

Volume Group Information

------------------------------------------------------------------------------

Volume Group ID 00c8502e00004c0000000145e1f142ed includes the following

logical volumes:

hd5 hd6 hd8 hd4 hd2 hd9var

hd3 hd1 hd10opt hd11admin livedump

------------------------------------------------------------------------------

Choose option 2 (Access this Volume Group and start a shell before mounting filesystems):

1) Access this Volume Group and start a shell

=> 2) Access this Volume Group and start a shell before mounting filesystems

While rootvg is being imported, an unusual message appears:

rootvg

Could not find "/" and/or "/usr" filesystems.

Exiting to shell.

Check the file systems and format the log device:

# fsck -y /dev/hd2

# fsck -y /dev/hd9var

# fsck -y /dev/hd3

# fsck -y /dev/hd1

# logform /dev/hd8

logform: destroy /dev/rhd8 (y)?y

Display the contents of the Logical Volume Control Block (LVCB) of the LVs.

The label in the hd2 LVCB is corrupted:

AIX LVCB

intrapolicy = c

copies = 1

interpolicy = m

lvid = 00c8502e00004c0000000145e1f142ed.5

lvname = hd2

label = /usr/!+or

machine id = 8502E4C00

number lps = 165

relocatable = y

strict = y

stripe width = 0

stripe size in exponent = 0

type = jfs2

upperbound = 32

fs = vfs=jfs2:log=/dev/hd8

time created = Fri May 9 17:04:23 2014

time modified = Tue Nov 4 13:19:18 2014
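A corrupted label like this one can be spotted by comparing it with the expected mount point. A minimal Python sketch, assuming getlvcb -AT style "key = value" lines; the expected labels are taken from the lsvg -l listing later in this note, and the helper names are hypothetical.

```python
# Default rootvg mount points, as shown in the lsvg -l listing in this note
EXPECTED_LABELS = {
    "hd4": "/", "hd2": "/usr", "hd9var": "/var", "hd3": "/tmp",
    "hd1": "/home", "hd10opt": "/opt", "hd11admin": "/admin",
}


def parse_lvcb(text):
    """Turn 'key = value' lines into a dict (split on the first '=' only)."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def check_lvcb(text):
    """True/False if the label matches the expected mount point, None if unknown LV."""
    fields = parse_lvcb(text)
    expected = EXPECTED_LABELS.get(fields.get("lvname"))
    if expected is None:
        return None
    return fields.get("label") == expected


corrupted = """AIX LVCB
lvname = hd2
label = /usr/!+or
fs = vfs=jfs2:log=/dev/hd8
"""
print(check_lvcb(corrupted))  # False
```

Splitting on the first "=" only keeps values such as "vfs=jfs2:log=/dev/hd8" intact.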

Correct the hd2 label with the putlvcb command, then verify:

# getlvcb hd2 -AT

AIX LVCB

intrapolicy = c

copies = 1

interpolicy = m

lvid = 00c8502e00004c0000000145e1f142ed.5

lvname = hd2

label = /usr

machine id = 8502E4C00

number lps = 165

relocatable = y

strict = y

stripe width = 0

stripe size in exponent = 0

type = jfs2

upperbound = 32

fs = vfs=jfs2:log=/dev/hd8

time created = Fri May 9 17:04:23 2014

time modified = Tue Nov 4 13:23:00 2014

At this point you cannot "chroot" into the rootvg system disk, because the VG was imported with a corrupted value for hd2 (/usr). You have to reboot into Maintenance mode again.

Choose option 2 and check that the previous error no longer appears.

1) Access this Volume Group and start a shell

2) Access this Volume Group and start a shell before mounting filesystems

99) Previous Menu

Choice [99]: 2

Importing Volume Group...

rootvg

Checking the / filesystem.

The current volume is: /dev/hd4

Primary superblock is valid.

Checking the /usr filesystem.

The current volume is: /dev/hd2

Primary superblock is valid.

Exit from this shell to continue the process of accessing the root

volume group.

To "chroot" into the rootvg disk and mount the file systems, type "exit":

# df

Filesystem 512-blocks Free %Used Iused %Iused Mounted on

/dev/ram0 720896 319192 56% 11797 22% /

/proc 720896 319192 56% 11797 22% /proc

/dev/cd0 - - - - - /SPOT

/dev/hd4 720896 319192 56% 11797 22% /

/dev/hd2 5406720 638528 89% 54526 39% /usr

/dev/hd3 294912 219096 26% 88 1% /tmp

/dev/hd9var 1015808 303160 71% 8987 17% /var

/dev/hd10opt 1015808 484192 53% 8860 13% /opt

The /usr label is still corrupted in the LV listing, in /etc/filesystems, and in the ODM:

rootvg:

LV NAME TYPE LPs PPs PVs LV STATE MOUNT POINT

hd5 boot 2 2 1 closed/syncd N/A

hd6 paging 32 32 1 open/syncd N/A

hd8 jfs2log 1 1 1 open/syncd N/A

hd4 jfs2 22 22 1 open/syncd /

hd2 jfs2 165 165 1 open/syncd /usr/!+or

hd9var jfs2 31 31 1 open/syncd /var

hd3 jfs2 9 9 1 open/syncd /tmp

hd1 jfs2 1 1 1 closed/syncd /home

hd10opt jfs2 31 31 1 open/syncd /opt

hd11admin jfs2 8 8 1 closed/syncd /admin

livedump jfs2 16 16 1 closed/syncd /var/adm/ras/livedump

# grep -p hd2 /etc/filesystems

/usr/!+or:

dev = /dev/hd2

vfs = jfs2

log = /dev/hd8

mount = automatic

check = false

type = bootfs

vol = /usr

free = false

# odmget -q 'name=hd2 and attribute=label' CuAt

CuAt:

name = "hd2"

attribute = "label"

value = "/usr/!+or"

type = "R"

generic = "DU"

rep = "s"

nls_index = 640
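The same bad label shows up in /etc/filesystems (as the stanza name) and in the CuAt label attribute. A minimal Python sketch that extracts both from the stanza-format text shown above so they can be compared with the expected /usr; it assumes the simple "name:" plus "key = value" layout and treats lines starting with * as comments. All function names are hypothetical.

```python
def parse_stanzas(text):
    """Parse AIX stanza text into a list of (stanza_name, attributes)."""
    stanzas = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("*"):
            continue  # blank line or comment
        if line.endswith(":") and "=" not in line:
            stanzas.append((line[:-1], {}))  # new stanza, e.g. "/usr:" or "CuAt:"
        elif "=" in line and stanzas:
            key, _, value = line.partition("=")
            stanzas[-1][1][key.strip()] = value.strip().strip('"')
    return stanzas


def mount_point_for(fs_text, device):
    """Return the /etc/filesystems stanza name whose dev matches device."""
    for name, attrs in parse_stanzas(fs_text):
        if attrs.get("dev") == device:
            return name
    return None


def odm_label(cuat_text, lv):
    """Return the CuAt 'label' value for a logical volume."""
    for name, attrs in parse_stanzas(cuat_text):
        if name == "CuAt" and attrs.get("name") == lv and attrs.get("attribute") == "label":
            return attrs.get("value")
    return None


fs = "/usr/!+or:\n dev = /dev/hd2\n vfs = jfs2\n vol = /usr\n"
cuat = 'CuAt:\n name = "hd2"\n attribute = "label"\n value = "/usr/!+or"\n'
for found in (mount_point_for(fs, "/dev/hd2"), odm_label(cuat, "hd2")):
    print(found, "OK" if found == "/usr" else "CORRUPTED")
```

A list is used rather than a dict because odmget output repeats the same stanza name (CuAt) for every object.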

Fix the corruption in the ODM and in /etc/filesystems.

Export the corrupted ODM entry (hd2 + label) to a file with odmget, then edit the file and correct it:

# export TERM=vt320

# export VISUAL=vi

# set -o vi

# vi /tmp/odm

CuAt:

name = "hd2"

attribute = "label"

value = "/usr"

type = "R"

generic = "DU"

rep = "s"

nls_index = 640

Back up the CuAt ODM class, then delete the corrupted entry from CuAt:

# odmdelete -q 'name=hd2 and attribute=label' -o CuAt

0518-307 odmdelete: 1 objects deleted.

Add the corrected entry from the file with odmadd, then check CuAt:

# odmget -q 'name=hd2 and attribute=label' CuAt

CuAt:

name = "hd2"

attribute = "label"

value = "/usr"

type = "R"

generic = "DU"

rep = "s"

nls_index = 640

Save the ODM to the boot logical volume (hd5) with savebase:

saving to '/dev/hd5'

47 CuDv objects to be saved

120 CuAt objects to be saved

14 CuDep objects to be saved

8 CuVPD objects to be saved

356 CuDvDr objects to be saved

2 CuPath objects to be saved

0 CuPathAt objects to be saved

0 CuData objects to be saved

0 CuAtDef objects to be saved

Number of bytes of data to save = 19005

Compressing data

Compressed data size is = 6850

bi_start = 0x3600

bi_size = 0x1b20000

bd_size = 0x1b00000

ram FS start = 0x9363b0

ram FS size = 0x114bc17

sba_start = 0x1b03600

sba_size = 0x20000

sbd_size = 0x1ac6

Checking boot image size:

new save base byte cnt = 0x1ac6

Wrote 6854 bytes

Successful completion

Edit /etc/filesystems to correct the /usr stanza, then check it:

# vi /etc/filesystems

# grep -p hd2 /etc/filesystems

/usr:

dev = /dev/hd2

vfs = jfs2

log = /dev/hd8

mount = automatic

check = false

type = bootfs

vol = /usr

free = false

Finally, flush memory to the file systems (sync) and reboot.

Creating NIM resources on an NFS shared NAS device

You can use a network-attached storage (NAS) device to store your Network Installation Management (NIM) resources by using the nas_filer resource server.

NIM support allows the hosting of file-type resources (such as mksysb, savevg, resolv_conf, bosinst_data, and script) on a NAS device. The resources can be defined in the NIM server database, and can be used for installation without changing any network information or configuration definitions on the Shared Product Object Tree (SPOT) server.

The nas_filer resource server is available in the NIM environment, and requires an interface attribute and a password file. You must manually define export rules and perform storage and disk management before you use any NIM operations.

To create resources on a NAS device by using the nas_filer resource server, complete the following steps:

Define the nas_filer object. You can enter a command similar to the following example:

Define a mksysb file that exists on the NAS device as a NIM resource. You can enter a command similar to the following example:

Optional:

If necessary, create a new resource (client backup) on the NAS device. You can use the following command to create a mksysb resource:

Optional:

If necessary, copy an existing NIM resource to the nas_filer object. You can use the following command to copy a mksysb resource.

Source: IBM Knowledge Center

Adding a nas_filer management object to the NIM environment

Follow the instructions to add a nas_filer management object.

If you define resources on a network-attached storage (NAS) device by using the nas_filer management object, you can use those resources without changing network information or configuration definitions on the Shared Product Object Tree (SPOT) server. To add a nas_filer object, the dsm.core fileset must be installed on the NIM master.

To add a nas_filer object from the command line, complete the following steps:

Create an encrypted password file that contains the login ID and related password on the NIM master to access the nas_filer object. The encrypted password file must be created by using the dpasswd command from the dsm.core fileset. If you do not want the password to be displayed in clear text, exclude the -P parameter. The dpasswd command prompts for the password. Use the following command as an example:

Pass the encrypted password file in the passwd_file attribute by using the define command of the nas_filer object. Use the following command as an example:

-a if1=InterfaceDescription \

-a net_definition=DefinitionName \

nas_filerName

If the network object that describes the network mask and the gateway that is used by the nas_filer object does not exist, use the net_definition attribute. After you remove the nas_filer objects, the file that is specified by the passwd_file attribute must be removed manually.

Example

To add a nas_filer object that has the host name nf1 and the following configuration:

host name=nf1

password file path=/etc/ibm/sysmgt/dsm/config/nf1

network type=ethernet

subnet mask=255.255.240.0

default gateway=gw1

default gateway used by NIM master=gw_master

Enter the following command:

-a if1="find_net nf1 0" \

-a net_definition="ent 255.255.240.0 gw1 gw_master" nf1
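The net_definition value in this example packs four fields into one string. A hypothetical Python helper that assembles it in the same field order as the example (network type, subnet mask, client gateway, NIM master gateway). The mask check with the standard ipaddress module is our own addition, and only the ethernet-to-ent mapping shown in the example is included.

```python
import ipaddress

# Only the mapping shown in the example; other network types are not covered
NET_TYPES = {"ethernet": "ent"}


def net_definition(net_type, mask, gateway, master_gateway):
    """Build a net_definition string in the example's field order (hypothetical helper)."""
    if net_type not in NET_TYPES:
        raise ValueError(f"unsupported network type: {net_type}")
    net = ipaddress.IPv4Network(f"0.0.0.0/{mask}")  # ValueError if not a mask at all
    if str(net.netmask) != mask:
        raise ValueError(f"not a dotted-decimal netmask: {mask}")
    return f"{NET_TYPES[net_type]} {mask} {gateway} {master_gateway}"


print(net_definition("ethernet", "255.255.240.0", "gw1", "gw_master"))
# ent 255.255.240.0 gw1 gw_master
```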

For more information about adding a nas_filer object, see the technical note that is included in the dsm.core fileset (/opt/ibm/sysmgt/dsm/doc/dsm_tech_note.pdf).

Daylight Saving Time problem on AIX 7.1 and AIX 6.1

System time may not change properly at DST start/end dates on AIX 7.1 and AIX 6.1

AIX systems or applications that use the POSIX time zone format may not change time properly at Daylight Savings Time start or end dates. Applications that use the AIX date command, or time functions such as localtime() and ctime(), on these systems may be affected.

This problem is exposed on your system if you have both of these underlying conditions:

1. Your system is at one of the affected AIX levels (listed in the technote linked below)

2. Your system is using a POSIX format time zone and the system or an application on the system is using a custom DST setting.

Read this technote to check if you are exposed.

Possible Action Required:

http://www-01.ibm.com/support/docview.wss?uid=isg3T1013017
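One way to see how a given POSIX TZ string behaves at a DST boundary is to convert UTC instants just before and after the switch with localtime(). A minimal Python sketch for a Unix-like system (time.tzset is not available on Windows); the TZ string EST5EDT,M3.2.0,M11.1.0 is an illustrative assumption, not taken from the technote. It exercises the C library's localtime() on the system where it runs and is not a substitute for the technote's exposure check.

```python
import calendar
import os
import time


def dst_state(tz, utc_fields):
    """Return (tm_isdst, local hour) for a UTC time under POSIX TZ string tz."""
    saved = os.environ.get("TZ")
    os.environ["TZ"] = tz
    time.tzset()
    try:
        local = time.localtime(calendar.timegm(utc_fields))
        return local.tm_isdst, local.tm_hour
    finally:
        # Restore the caller's time zone setting
        if saved is None:
            del os.environ["TZ"]
        else:
            os.environ["TZ"] = saved
        time.tzset()


TZ = "EST5EDT,M3.2.0,M11.1.0"  # illustrative POSIX TZ with explicit DST rules
print(dst_state(TZ, (2015, 3, 8, 6, 59, 59)))  # (0, 1) just before the switch
print(dst_state(TZ, (2015, 3, 8, 7, 0, 0)))    # (1, 3) just after the switch
```

With this rule, DST in 2015 starts on Sunday 8 March at 02:00 local time (07:00 UTC), so a correct system reports standard time at 01:59:59 and daylight time at 03:00.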

Verify and test that a UDP port is open

Problem(Abstract)

How to verify that a UDP port is open and how to test that the port is working for a third party application.

Resolving the problem

Command to verify that a UDP port is open to receive incoming datagrams:

# netstat -an | grep <port number>

Example: tftp uses port 69 to transfer data.

# netstat -an | grep .69

Proto Recv-Q Send-Q Local Address Foreign Address (state)

udp 0 0 *.69 *.*
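Note that grep .69 also matches any line that merely contains some character followed by 69, such as an address like 10.1.69.3. A minimal Python sketch that instead checks the port field of the local address on udp lines, assuming the netstat -an column layout shown above; the function name is hypothetical.

```python
def udp_listening(netstat_output, port):
    """True if a udp line's local address ends in exactly .<port>."""
    for line in netstat_output.splitlines():
        cols = line.split()
        # Columns: Proto Recv-Q Send-Q Local-Address Foreign-Address [state]
        if len(cols) >= 4 and cols[0].startswith("udp"):
            if cols[3].rsplit(".", 1)[-1] == str(port):
                return True
    return False


sample = """Proto Recv-Q Send-Q Local Address Foreign Address (state)
udp 0 0 *.69 *.*
tcp 0 0 10.1.69.3.22 10.1.1.5.50000 ESTABLISHED
"""
print(udp_listening(sample, 69))    # True
print(udp_listening(sample, 1069))  # False
```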

To capture UDP packets and prove that a specific port is in use, run either the tcpdump command or the iptrace command:

# tcpdump "port <port number>"

or

# startsrc -s iptrace -a "-a -p <port number> /tmp/udp.port"

# stopsrc -s iptrace    (stop the trace)

# ipreport -rnsC /tmp/udp.port > /tmp/udp.port.out    (convert the binary trace to readable text)

Example: start the packet capture.

# tcpdump 'port 69'

Then use tftp to transfer a file. This example transfers the /etc/motd file from a system called dipperbso to a system called burritobso.

# tftp -p /etc/motd burritobso /tmp/motd

Example of the output:

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on en0, link-type 1, capture size 96 bytes

08:50:24.627840 IP dipperbso.52046 > burritobso.tftp: 21 WRQ "/tmp/motd" netascii
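The tcpdump check needs a real sender and receiver. For a quick local sanity check that UDP datagrams are delivered, a Python sketch can bind a receiver, send it one datagram and read it back. It binds to port 0 so the OS picks a free port; testing a specific port such as 69 would mean passing it as the port argument, which usually requires root and fails if a service (for example tftpd) already holds that port. The function name is hypothetical.

```python
import socket


def udp_round_trip(payload=b"ping", host="127.0.0.1", port=0):
    """Bind a UDP receiver, send it one datagram, and return (port, data)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
            socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind((host, port))  # port 0: let the OS choose a free port
        receiver.settimeout(2.0)     # fail instead of hanging if nothing arrives
        bound_port = receiver.getsockname()[1]
        sender.sendto(payload, (host, bound_port))
        data, _ = receiver.recvfrom(1024)
        return bound_port, data


port, data = udp_round_trip()
print(port, data)
```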

Source: IBM Technote

AIX MPIO error log information

SC_DISK_PCM_ERR1 Subsystem Component Failure

The storage subsystem has returned an error indicating that some component (hardware or software) of the storage subsystem has failed. The detailed sense data identifies the failing component and the recovery action that is required. Failing hardware components should also be shown in the Storage Manager software, so the placement of these errors in the error log is advisory and is an aid for your technical-support representative.

SC_DISK_PCM_ERR2 Array Active Controller Switch

The active controller for one or more hdisks associated with the storage subsystem has changed. This is in response to some direct action by the AIX host (failover or autorecovery). This message is associated with either a set of failure conditions causing a failover or, after a successful failover, with the recovery of paths to the preferred controller on hdisks with the autorecovery attribute set to yes.

SC_DISK_PCM_ERR3 Array Controller Switch Failure

An attempt to switch active controllers has failed. This leaves one or more paths with no working path to a controller. The AIX MPIO PCM will retry this error several times in an attempt to find a successful path to a controller.

SC_DISK_PCM_ERR4 Array Configuration Changed

The active controller for an hdisk has changed, usually due to an action not initiated by this host. This might be another host initiating failover or recovery (for shared LUNs), a redistribute operation from the Storage Manager software, a change to the preferred path in the Storage Manager software, a controller being taken offline, or any other action that causes the active controller ownership to change.

SC_DISK_PCM_ERR5 Array Cache Battery Drained

The storage subsystem cache battery has drained. Any data remaining in the cache is dumped and is vulnerable to data loss until it is dumped. Caching is not normally allowed with drained batteries unless the administrator takes action to enable it within the Storage Manager software.

SC_DISK_PCM_ERR6 Array Cache Battery Charge Is Low

The storage subsystem cache batteries are low and need to be charged or replaced.

SC_DISK_PCM_ERR7 Cache Mirroring Disabled

Cache mirroring is disabled on the affected hdisks. Normally, any cached write data is kept within the cache of both controllers so that if either controller fails there is still a good copy of the data. This is a warning message stating that loss of a single controller will result in data loss.

SC_DISK_PCM_ERR8 Path Has Failed

The I/O path to a controller has failed or gone offline.

SC_DISK_PCM_ERR9 Path Has Recovered

The I/O path to a controller has resumed and is back online.

SC_DISK_PCM_ERR10 Array Drive Failure

A physical drive in the storage array has failed and should be replaced.

SC_DISK_PCM_ERR11 Reservation Conflict

A PCM operation has failed due to a reservation conflict. This error is not currently issued.

SC_DISK_PCM_ERR12 Snapshot™ Volume’s Repository Is Full

The snapshot volume repository is full. Write actions to the snapshot volume will fail until the repository problems are fixed.

SC_DISK_PCM_ERR13 Snapshot Op Stopped By Administrator

The administrator has halted a snapshot operation.

SC_DISK_PCM_ERR14 Snapshot repository metadata error

The storage subsystem has reported that there is a problem with snapshot metadata.

SC_DISK_PCM_ERR15 Illegal I/O - Remote Volume Mirroring

The I/O is directed to an illegal target that is part of a remote volume mirroring pair (the target volume rather than the source volume).

SC_DISK_PCM_ERR16 Snapshot Operation Not Allowed

A snapshot operation that is not allowed has been attempted.

SC_DISK_PCM_ERR17 Snapshot Volume’s Repository Is Full

The snapshot volume repository is full. Write actions to the snapshot volume will fail until the repository problems are fixed.

SC_DISK_PCM_ERR18 Write Protected

The hdisk is write-protected. This can happen if a snapshot volume repository is full.

SC_DISK_PCM_ERR19 Single Controller Restarted

The I/O to a single-controller storage subsystem is resumed.

SC_DISK_PCM_ERR20 Single Controller Restart Failure

The I/O to a single-controller storage subsystem is not resumed. The AIX MPIO PCM will continue to attempt to restart the I/O to the storage subsystem.
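When scanning errpt -a output, these labels can be mapped back to their titles. A minimal Python sketch; the titles are copied from the list above, the function name is hypothetical, and it simply searches any text for SC_DISK_PCM_ERRn labels.

```python
import re

# Titles copied from the SC_DISK_PCM_ERRn list above
PCM_TITLES = {
    1: "Subsystem Component Failure",
    2: "Array Active Controller Switch",
    3: "Array Controller Switch Failure",
    4: "Array Configuration Changed",
    5: "Array Cache Battery Drained",
    6: "Array Cache Battery Charge Is Low",
    7: "Cache Mirroring Disabled",
    8: "Path Has Failed",
    9: "Path Has Recovered",
    10: "Array Drive Failure",
    11: "Reservation Conflict",
    12: "Snapshot Volume's Repository Is Full",
    13: "Snapshot Op Stopped By Administrator",
    14: "Snapshot repository metadata error",
    15: "Illegal I/O - Remote Volume Mirroring",
    16: "Snapshot Operation Not Allowed",
    17: "Snapshot Volume's Repository Is Full",
    18: "Write Protected",
    19: "Single Controller Restarted",
    20: "Single Controller Restart Failure",
}

LABEL_RE = re.compile(r"\bSC_DISK_PCM_ERR(\d+)\b")


def describe_pcm_labels(text):
    """Return (label, title) for each SC_DISK_PCM_ERRn label found in text."""
    return [
        (m.group(0), PCM_TITLES.get(int(m.group(1)), "unknown label"))
        for m in LABEL_RE.finditer(text)
    ]


print(describe_pcm_labels("LABEL: SC_DISK_PCM_ERR8"))
# [('SC_DISK_PCM_ERR8', 'Path Has Failed')]
```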